Have you ever made a prediction and been way off? That's typical: we humans are terrible at getting predictions right. Everyone was expecting a stock market crash over the last several years, but we got COVID instead and the market rallied hard. As we start 2023, it feels like Artificial Intelligence (AI) is taking over the world as ChatGPT becomes part of everyone's New Year's resolution. Everyone is making predictions and placing bets on this next tech explosion and what might be around the corner: major job displacement, heightened social and economic tensions, and even a point of no return, the rise of superintelligent machines beyond mankind's capacity, which experts have coined the "Singularity".

Some of our very wrong predictions: in the '50s and '60s, the public hoped we would have flying cars by now. Although we did make it to the moon by the late '60s, predictions of landing on Mars will have to wait until the 2030s to be fulfilled, possibly by SpaceX. The last AI hype cycle, in the '80s, believed AGI was around the corner but led to an AI winter instead.

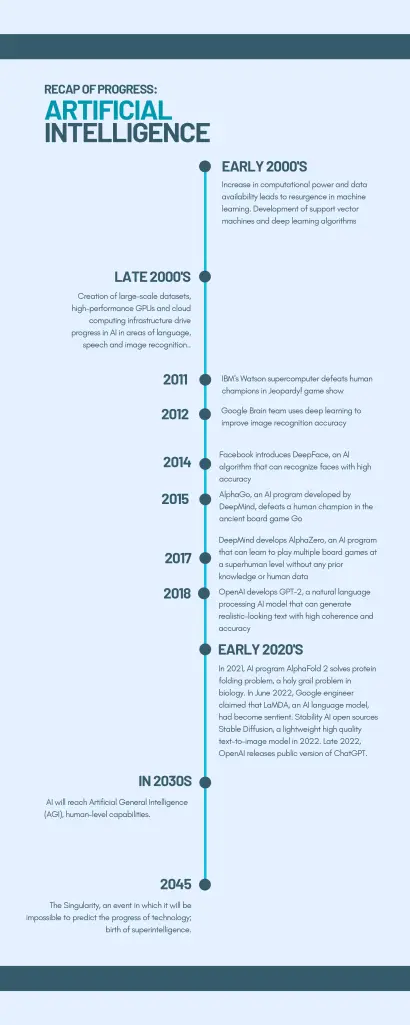

As a good reminder, our track record of predictions has been terrible. Let's revisit the '80s and '90s, when AI experts and academics claimed that we would soon be creating Expert Systems (weak AI), mastering Natural Language Processing (NLP), and achieving Artificial General Intelligence (AGI) in their time. Clouded by hopium, they couldn't and didn't predict the AI winter, when the technology of the time lacked the infrastructure, capability, and methods needed. It was only in recent decades that the accumulation of data and advances in computing and methods, such as deep neural networks and the Transformer architecture, along with many impressive milestones, led us to the public release of large AI models like Stability AI's Stable Diffusion and OpenAI's ChatGPT, where we have seen and measured significant progress.

A timeline of AI progress in the last 20 years. Oftentimes it's hard to see the exponential trend when living year to year. AI has really come a long way.

Which leads us to the AI hype cycle of 2023, where "AI" is the buzzword and has caused stock prices to explode at its mere mention. This time, the tone of public opinion appears to hold more concern about AI than hope. Some opponents, like politician Andrew Yang, advocate for employment retraining programs and Universal Basic Income (UBI) due to the ramp-up in job automation by AI. We got a taste of UBI at work during the pandemic, with loose eligibility for stimulus payments and unemployment benefits, as workers reskilled themselves and left service-level and labor-intensive jobs. Proponents of AI, like inventor and futurist Ray Kurzweil, support UBI and predict we may need it as soon as the 2030s, since AGI will be here by then, with the Singularity of superintelligence occurring in the 2040s.

These troubles and predictions aren't that far away. What's our chance of being right this time?

Let's measure the ability of today's AI. I'll assume you've been abusing ChatGPT, a form of weak AI, for menial and mundane tasks (as you should!). Yes, chatbots like ChatGPT and Bard, and other AI models such as Stable Diffusion and Midjourney, have wowed us all with content generation, from writing stories and essays to creating high-quality art and entertainment in whatever style or theme, within seconds. It is unbelievable that this is widely and often freely available today when, just over a decade ago, the public and experts considered the AI winter still in effect. But these AI models still haven't shown any signs of sentience, contrary to what a former Google engineer recently claimed. Current AI models are black boxes that require our creative and specific inputs and don't always output what we want. Their outputs still need a lot of post-processing to achieve consistency and real-world use. As we generate massive amounts of content with these tools, it's not certain we'll actually become as productive as we may appear and feel, since creative tasks and original work still require the human touch. Your work will need even more of the human touch to stand out.

Although creativity is still within the firm grasp of mankind, it may not be for long. There's a strong chance our predictions are right this time, and we should be anxious about the benefits and dangers to come. We've been wrong before, often because we were too early or too optimistic about a new technology and its capabilities, caught up in the hype. AI may seem like a buzzword of today's era, but it was also a buzzword in the '80s. When we're wrong, we tend to make claims out of alignment with the data trend, and the most important trend is the exponential growth of technology and its capabilities. The current trend has achieved narrow or weak AI, which has already surpassed human-level performance in many areas (chess, Go, game theory, computational biology), and now we are beginning to see signs of it in literature, art, and entertainment. Similar to Moore's Law but faster, the cost of training AI models has declined, and will continue to decline, exponentially as compute performance improves and energy costs fall; at the same time, the capabilities of future AI models are increasing geometrically.

Reflecting on the last couple of decades, it may feel like we've been on a linear path of progress in AI, but factoring in our achievements, progress, and trajectory, we're at the beginning of the exponential curve. AGI and the Singularity might be even closer than many estimates suggest. Either way, as with any technology, it's a double-edged sword: there will be good and bad, and plenty of smart people are working to minimize the bad while trying to win the AI race. As for the good, the upside is unlimited, perhaps even immortality. Nonetheless, let's come back to this point before 2030, when we can further discuss how bad we still are at predicting future technology.